TLDR;

So you want to get your NVIDIA GPU working inside an LXC container on your Proxmox host.

I followed these guides from Digital Spaceport:

https://www.youtube.com/watch?v=lNGNRIJ708k

https://digitalspaceport.com/proxmox-lxc-gpu-passthru-setup-guide/

- install some foundational packages

- install NVIDIA drivers on the Proxmox host

- review the cgroup2 IDs for the GPU

- create the LXC container and update the .conf file in /etc/pve/lxc

- copy the NVIDIA driver to the container and install (with --no-kernel-modules)

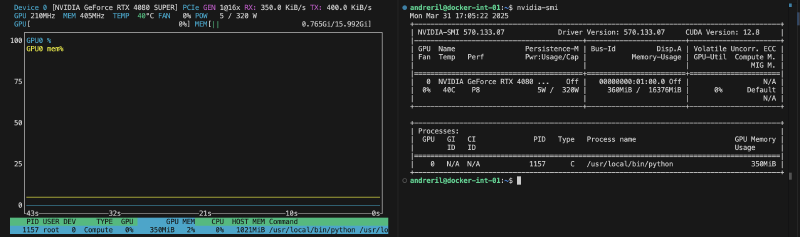

- test you can run “nvtop” or “nvidia-smi” from the LXC container

- install the NVIDIA container toolkit

- enable no-cgroups in NVIDIA container toolkit

- install Docker

- configure the nvidia-ctk runtime for Docker

- Reboot and check everything works.

- Optional: you might need to enable persistence mode with the “nvidia-persistenced” daemon: https://gist.github.com/ngoc-minh-do/fcf0a01564ece8be3990d774386b5d0c#enable-persistence-mode

- Troubleshooting: https://medium.com/@PlanB./troubleshooting-gpu-passthrough-in-proxmox-common-pitfalls-and-fixes-9124c0182524

Here are my steps from the TLDR above.

Base Proxmox build prep

- Follow the guide here: https://digitalspaceport.com/proxmox-lxc-gpu-passthru-setup-guide/

- Get the NVIDIA drivers: https://www.nvidia.com/en-us/drivers

- Install the driver


- Check

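The TLDR step "review the cgroup2 IDs for the GPU" comes down to reading the character-device major numbers of the /dev/nvidia* nodes on the host. A minimal sketch, assuming the usual `ls -l` layout where the major number is the fifth field followed by a comma; the helper name `nvidia_device_majors` is made up for this example and is not part of any NVIDIA or Proxmox tool:

```shell
# Hypothetical helper: read `ls -l` output on stdin and print the unique
# major numbers of all character devices it lists, one per line.
nvidia_device_majors() {
  awk '$1 ~ /^c/ { sub(/,/, "", $5); print $5 }' | sort -un
}
```

On the host it could be used as `ls -l /dev/nvidia* | nvidia_device_majors`. The numbers it prints are the ones that go into the lxc.cgroup2.devices.allow lines, and they may differ from host to host.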

UNFINISHED BELOW

- Configure a container

Start a container

Terraform

Append to the LXC .conf file:

    lxc.cgroup2.devices.allow: c 195:* rwm
    lxc.cgroup2.devices.allow: c 234:* rwm
    lxc.cgroup2.devices.allow: c 509:* rwm
    lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file
    lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file
    lxc.mount.entry: /dev/nvidia-modeset dev/nvidia-modeset none bind,optional,create=file
    lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file
    lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file
    lxc.mount.entry: /dev/nvidia-caps/nvidia-cap1 dev/nvidia-caps/nvidia-cap1 none bind,optional,create=file
    lxc.mount.entry: /dev/nvidia-caps/nvidia-cap2 dev/nvidia-caps/nvidia-cap2 none bind,optional,create=file
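Since the major numbers in the allow lines can change between hosts, it may be easier to generate the block than to type it. A rough sketch: the function name `lxc_gpu_config` is invented for this example, and the device list is simply the one from my config above, so adjust it if your /dev/nvidia* nodes differ.

```shell
# Hypothetical helper: print the LXC config lines for NVIDIA passthrough.
# Arguments are the device major numbers found on the host.
lxc_gpu_config() {
  # One cgroup2 allow rule per major number
  for major in "$@"; do
    printf 'lxc.cgroup2.devices.allow: c %s:* rwm\n' "$major"
  done
  # One bind mount per device node (list taken from the config above)
  for dev in nvidia0 nvidiactl nvidia-modeset nvidia-uvm nvidia-uvm-tools \
             nvidia-caps/nvidia-cap1 nvidia-caps/nvidia-cap2; do
    printf 'lxc.mount.entry: /dev/%s dev/%s none bind,optional,create=file\n' "$dev" "$dev"
  done
}
```

For container 105, `lxc_gpu_config 195 234 509 >> /etc/pve/lxc/105.conf` should reproduce the lines listed above. Review the output before appending it to a real config.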

Copy the NVIDIA driver to the container (run on the host):

    pct push 105 NVIDIA-Linux-x86_64-550.107.02.run /root/NVIDIA-Linux-x86_64-550.107.02.run

Within the container:

    ./NVIDIA-Linux-x86_64-550.107.02.run --no-kernel-modules

Install the NVIDIA container toolkit:

    apt install gpg curl
    curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
      && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list \
      | sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' \
      | tee /etc/apt/sources.list.d/nvidia-container-toolkit.list
    apt update
    apt install nvidia-container-toolkit

Enable no-cgroups in the NVIDIA container toolkit:

    nano /etc/nvidia-container-runtime/config.toml
    # change "#no-cgroups = false" to "no-cgroups = true"
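If you would rather script the no-cgroups change than open an editor, something like the following might do. It is only a sketch: `enable_no_cgroups` is a name I made up, and it assumes the setting sits on a single line of its own, commented or not, as it did in my config.toml.

```shell
# Hypothetical helper: set "no-cgroups = true" in the given config file,
# whether the existing line is commented out or not.
enable_no_cgroups() {
  file="$1"
  tmp="$(mktemp)" || return 1
  # Rewrite the no-cgroups line (keeping its indentation), then copy the
  # result back so the original file's owner and permissions are preserved.
  sed -E 's/^([[:space:]]*)#?[[:space:]]*no-cgroups[[:space:]]*=.*/\1no-cgroups = true/' "$file" > "$tmp" \
    && cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return "$status"
}
```

Inside the container that would be `enable_no_cgroups /etc/nvidia-container-runtime/config.toml`. Running it twice is harmless, and a quick `grep no-cgroups` on the file afterwards confirms the result.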

Install Docker:

    apt update
    apt install ca-certificates
    install -m 0755 -d /etc/apt/keyrings
    curl -fsSL https://download.docker.com/linux/debian/gpg | gpg --dearmor -o /etc/apt/trusted.gpg.d/docker.gpg
    chmod a+r /etc/apt/trusted.gpg.d/docker.gpg
    echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/trusted.gpg.d/docker.gpg] https://download.docker.com/linux/debian bookworm stable" | tee /etc/apt/sources.list.d/docker.list > /dev/null
    apt update
    apt install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

Configure the nvidia-ctk runtime for Docker:

    nvidia-ctk runtime configure --runtime=docker
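For the final "reboot and check everything works" step, a small pre-flight check can tell you which of the expected commands are missing before you try a GPU workload. This is a sketch only, and `missing_tools` is a hypothetical helper name, not something shipped with any of the packages above.

```shell
# Hypothetical helper: print each named command that is NOT found on PATH.
# Prints nothing when every tool is available.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}
```

Inside the container, `missing_tools nvidia-smi nvidia-ctk docker` should print nothing. After that, `nvidia-smi` on its own and NVIDIA's sample workload `docker run --rm --runtime=nvidia --gpus all ubuntu nvidia-smi` should both list the GPU, assuming the steps above went through.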